This section covers how accessing data in SAS changed over the years:

First, SAS data storage moved from punch cards to mainframes.

Next, the invention of personal computers (PCs) led to reconfiguring how SAS data was accessed.

Consequently, reading data into SAS from external data files became more common.

In this section, we will discuss how to read data in SAS from an external file, as well as the opportunities and limitations of how SAS processes data.

Upgrading to mainframes

In 1979, Databank of New Zealand adapted SAS to run under IBM's VM/CMS system using IBM's disk operating system (DOS), thus solving the punch card problem and establishing SAS as mainframe software that was remotely hosted. This represented essentially the second rewrite of SAS since its 1976 rewrite. This upgrade made SAS more easily accessible to more customers. It also facilitated the ability for SAS to include more sophisticated components to add onto Base SAS. At the same time, it created new challenges for efficient data input and output (I/O).

In 1979, Barr resigned from SAS, and SAS moved into its current headquarters in Cary, North Carolina (NC). In 1980, SAS added the components SAS/GRAPH for the presentation of graphics, and SAS/ETS for econometric and time series analysis. Prior to SAS/GRAPH, plots were developed using text characters. SAS/GRAPH allowed the output to be displayed as graphics rather than text.

New PROCs added as part of the new SAS/GRAPH component included PROC GCHART and PROC GPLOT. In the current SAS University Edition, these PROCs are no longer available and have been replaced with updated versions. At the time, however, SAS/GRAPH was considered a great improvement in graphical display over what had been available previously.

Note:

For examples of PROC GCHART and PROC GPLOT output, read Mike Kalt and Cynthia Zender's white paper on SAS graphics for the non-statistician (included in the Further reading section).

During the 1980s, SAS as a company grew dramatically; its campus expanded to 18 buildings that included a training center, publications warehouse, and video studio. By the end of the 1980s, SAS had nearly 1,500 worldwide employees and had established new offices on four continents. But as the 1980s wore on, PCs were becoming popular, and customers were demanding a way of running SAS on PCs.

Therefore, SAS had to iterate again in order to keep up with the pace of technological innovation in the background. Even though SAS was now running on mainframes, SAS's effort in innovation had been concentrated on the PROCs, and less attention was paid to optimizing the data management functions provided by the data steps. The response by both the company and SAS was to find ways to optimize the functioning of the SAS system, rather than rebuilding PROCs or data steps.

Transitioning to personal computers

To accommodate PC users, in the 1980s, SAS was rewritten in the C language, which was popular at the time for PC applications. At the same time, SAS developed a new software architecture to run across multiple platforms, which SAS is still known for today. At the time, this PC functionality was introduced as a micro-to-mainframe link, allowing customers to store a dataset on a mainframe while running programs from their PCs.

Having an application running on PCs afforded SAS the opportunity to improve the user experience. SAS developed a graphical user interface (GUI) that resembled the Macintosh and Windows environments that were popular at the time and continued to move away from a numbers-centric format for data display and toward enhanced graphics and visualizations.

Note:

PC SAS does not run on Macintosh computers. But during the 1980s, SAS developed JMP* (pronounced jump), which is a statistical program that can perform many of the same tasks as SAS on macOS.

SAS still had the limitations that its data steps were sequential; so it still read data line by line, just as it had done with punch cards. Data steps were the main functions used to export data out of SAS, and therefore, SAS exported data line by line. This created a lack of flexibility in the format of output files. So, to get around this, the Output Delivery System (ODS) was created. This system allows the user to format output in a variety of formats and is still used currently in SAS today, such as Excel, *.pdf, or *.rtf files, with a specific component for delivering graphics called ODS Graphics.

While this period of SAS's evolution brought many innovations, they were mostly in the area of improving the user experience, rather than focusing on data handling. In terms of the micro-to-mainframe link, the development was mostly focused on the micro rather than the mainframe component. This focus on user experience seemed consistent with SAS's values of putting customers and employees first. During the 1980s, the company was recognized by its customers as helping them make sense out of their vast amount of data and helping them have the results of their data analysis guide their decisions. It also innovated in the area of employee wellness, opening an on-site childcare center in 1981, followed by establishing an on-site fitness center, health care center, and café.

Reading external files

With the movement to PCs, customers wanted to import external data files into local copies of SAS, rather than using CARDS or DATALINES to input data, or connecting to a mainframe data source. This was accomplished through revisions to the INFILE statement, the use of external file references, and the setting of options. Today, SAS has created an automated way to read files using a graphical user interface (GUI) which launches a wizard that creates PROC IMPORT code.

If you are using the University Edition of SAS, you can place the data file for this chapter named Chap_1_1_Infile.csv into your myfolders folder, and if you run the following PROC IMPORT code, the file should be imported into WORK:

%web_drop_table(WORK.IMPORT);

FILENAME REFFILE '/folders/myfolders/Chap 1_1_Infile.csv';

PROC IMPORT DATAFILE=REFFILE

DBMS=CSV

OUT=WORK.IMPORT;

GETNAMES=YES;

RUN;

PROC CONTENTS DATA=WORK.IMPORT;

RUN;

%web_open_table(WORK.IMPORT);

When SAS code is run, it produces a log file. In current SAS applications, the log file opens in a separate window. The log file repeats the code that has been run and includes messages providing feedback, including error messages. It is important to always review the log file to make sure errors and key warnings do not exist, as well as to confirm any assumptions by SAS. Using the point-and-click GUI in SAS University Edition, SAS will create the preceding code and then run it to import the Chap 1_1_Infile.csv file. This is evident because this is the code that is displayed in the log file.

Notice that the code refers to the following items:

An external reference file using the REFFILE command

A connection between the data file being created and the reference file through DATAFILE = REFFILE

The specification that the input file is a comma-separated values (CSV) file through DBMS = CSV

The OUT specification to make SAS output the resulting dataset named IMPORT into WORK through OUT = WORK.IMPORT

The automatic placement of a PROC CONTENTS command to display the contents of the dataset

PROC IMPORT code like the preceding code does not provide the opportunity for a programmer to specify details about how they want the resulting dataset formatted, column by column. This type of specification can be achieved using a series of commands.

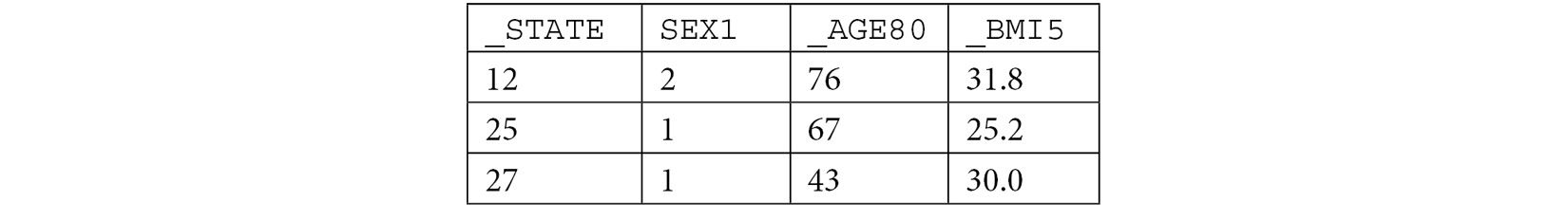

Let's consider the source file, which is Chap 1_1_Infile.csv. This source file has the rows from the BRFSS 2018 dataset for FL, MA, and MN (_STATE equals 12, 25, or 27). It also has these columns: _STATE, SEX1, _AGE80, and _BMI5, which is the respondent's body mass index (BMI) stored as a four-position integer that should have a decimal placed between the second and third integer. The following table displays three records from the source data:

Table 1.2 – Example of four variables from three records of BRFSS source data

Using the following code, we can read in the *.csv file and specify details about the column formats:

data Chap_1_1_Infile;

%let _EFIERR_ = 0;

infile '/folders/myfolders/Chap 1_1_Infile.csv' delimiter = ',' firstobs=2

MISSOVER DSD lrecl=32767;

informat _STATE 2.;

informat SEX1 2.;

informat _AGE80 2.;

informat _BMI5 2.2;

format _STATE 2.;

format SEX1 2.;

format _AGE80 2.;

format _BMI5 4.1;

input

_STATE

SEX1

_AGE80

_BMI5

;

if _ERROR_ then call symputx('_EFIERR_',1);

RUN;

The preceding code provides an opportunity to look at various aspects of the way SAS reads in data using the INFILE statement:

The code opens with a data step specifying the output file to be named Chap_1_1_Infile and placed in WORK.

The %let _EFIERR_ = 0; and if _ERROR_ then call symputx('_EFIERR_',1); commands are used for error handling.

The INFILE command has many options that can be used. The preceding code uses delimiter = ',' to indicate that the source file is comma-delimited, and firstobs=2 to indicate that the values in the first observation are on row 2 (as the column names are in row 1).

The INFORMAT command provides the opportunity to specify the format of the source data being read in. This is important to make sure data is read correctly without losing any information. Notice how _BMI5 is specified at 2.2, meaning two numbers before the decimal, and two numbers after it.

The FORMAT command allows the ability to specify the format of the data output into the Chap_1_1_Infile file. Note that formats do not change how the data is stored, but only control how they are displayed. This can be confusing to new SAS programmers. Notice how _BMI5 is specified at 4.1, so it should result in a variable with four numbers before the decimal and one number after it.

As with when we used CARDS and DATALINES, the INPUT statement signals the point where SAS should start reading in the data, and names each column in order.

The resulting dataset in WORK, named Chap_1_1_Infile, looks like this:

Table 1.3 – Example of the same source data formatted using the FORMAT command

The ability to specify details about importing data was necessary for SAS users to be able to read flat files that were exported out of another system. The INFILE approach with FORMAT and INFORMAT allowed the necessary flexibility in programming to allow conditionals to be placed in code to facilitate SAS reading only parts of the files, and the ability to direct SAS to specific coordinates on raw datafiles and direct it to read those values a certain way. But while using INFILE and related commands increased the flexibility behind the use of big data in SAS (because the data step functioning was still based on the sequential read approach used with the punch cards), there were limited opportunities for the programmer to improve I/O.

Free Chapter

Free Chapter