Compared to other predictive models that you might have used, deep neural networks are very complicated. Consider a network with 100 inputs, two hidden layers with 30 neurons each, and a logistic output layer. That network would have 3,930 learnable weights (3,991 learnable parameters once the bias terms are counted), as well as the hyperparameters needed for optimization, and that's a very small example. A large convolutional neural network can have hundreds of millions of learnable parameters. All these parameters are what make deep neural networks so good at learning structures and patterns. However, they also make overfitting possible.
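As a quick check on that arithmetic, here is a minimal sketch that counts the parameters of a fully connected network from its layer widths. The function name `dense_param_count` is made up for illustration and is not part of any library. Counting only the connection weights gives 3,930; adding one bias per neuron brings the total to 3,991.

```python
def dense_param_count(layer_sizes, include_bias=True):
    """Count learnable parameters in a fully connected network.

    layer_sizes lists the width of every layer, inputs first.
    (Hypothetical helper, written only for this example.)
    """
    total = 0
    for n_in, n_out in zip(layer_sizes, layer_sizes[1:]):
        total += n_in * n_out      # one weight per connection
        if include_bias:
            total += n_out         # one bias per neuron in the layer
    return total


# 100 inputs, two hidden layers of 30 neurons, one logistic output
sizes = [100, 30, 30, 1]
weights_only = dense_param_count(sizes, include_bias=False)
with_biases = dense_param_count(sizes)
print(weights_only, with_biases)
```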

Building datasets for deep learning

Bias and variance errors in deep learning

You may be familiar with the so-called bias/variance trade-off in typical predictive models. In case you're not, we'll provide a quick reminder here. With traditional predictive models, there is usually a compromise between the error that comes from bias and the error that comes from variance. So let's see what these two errors are:

- Bias error: Bias error is the error that is introduced by the model. For example, if you attempted to model a non-linear function with a linear model, your model would be underspecified and the bias error would be high.

- Variance error: Variance error is the error that is introduced by randomness in the training data. When we fit our training distribution so well that our model no longer generalizes, we have overfit, or introduced a variance error.
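To make the two errors concrete, here is a small sketch that uses two deliberately simple, non-neural models on noisy samples of y = x². The setup is an assumption chosen for illustration: the noise level, the sample sizes, and the helper names are all hypothetical. A straight line cannot represent the curve, so it has a high training error (bias). A 1-nearest-neighbor model memorizes the training points, so its training error is zero while its error on fresh data is not (variance).

```python
import random


def make_data(n, rng):
    """Sample noisy points from the non-linear function y = x**2."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    ys = [x * x + rng.gauss(0.0, 0.1) for x in xs]
    return xs, ys


def fit_line(xs, ys):
    """Ordinary least squares for y = a*x + b, in closed form."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b


def fit_nearest_neighbor(xs, ys):
    """Memorize the training set; predict the y of the closest x."""
    points = list(zip(xs, ys))
    return lambda x: min(points, key=lambda p: abs(p[0] - x))[1]


def mse(model, xs, ys):
    """Mean squared error of a model on a dataset."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


rng = random.Random(0)
train_x, train_y = make_data(200, rng)
test_x, test_y = make_data(200, rng)

line = fit_line(train_x, train_y)
memorizer = fit_nearest_neighbor(train_x, train_y)

line_train = mse(line, train_x, train_y)       # high: the model is too simple
nn_train = mse(memorizer, train_x, train_y)    # zero: the noise was memorized
nn_test = mse(memorizer, test_x, test_y)       # non-zero: it does not carry over
print(line_train, nn_train, nn_test)
```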

In most machine learning applications, we seek to find some compromise that minimizes bias error, while introducing as little variance error as possible. I say most because one of the great things about deep neural networks is that, for the most part, bias and variance can be manipulated independently of one another. However, to do so, we will need to be very careful with how we structure our training data.

The train, val, and test datasets

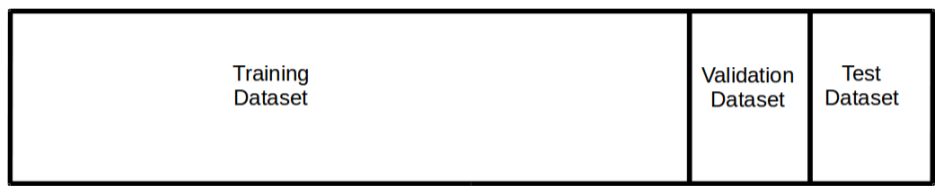

For the rest of the book, I will be structuring my data into three separate sets that I'll refer to as train, val, and test. These three separate datasets, drawn as random samples from the total dataset, will be structured and sized approximately as shown in the preceding figure.

The train dataset will be used for training the network, as expected.

The val dataset, or the validation dataset, will be used to find ideal hyperparameters and to measure overfitting. At the end of an epoch, which is when the network has had the opportunity to observe every data point in the training set, we will make a prediction on the val set. That prediction will be used to watch for overfitting and will help us know when the network has finished training. Using the val set at the end of each epoch like this differs somewhat from the typical usage. For more information on Hold-Out validation, see The Elements of Statistical Learning by Hastie, Tibshirani, and Friedman (https://web.stanford.edu/~hastie/ElemStatLearn/).

The test dataset will be used once all training is complete, to accurately measure model performance on a set of data that the network hasn't seen.
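The end-of-epoch check on the val set can be sketched as a simple stopping rule. This is only one possible rule, and it is an assumption on my part rather than a prescribed method: stop when the val loss has not improved for a fixed number of epochs (the `patience`), and remember which epoch was best. The loss values below are invented for illustration.

```python
def best_epoch_with_patience(val_losses, patience=3):
    """Return (stop_epoch, best_epoch), both 0-indexed.

    Training stops once the val loss has failed to improve for
    `patience` consecutive epochs, or when the losses run out.
    (Hypothetical helper, written only for this example.)
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch
    return len(val_losses) - 1, best_epoch


# Made-up val losses: improving at first, then drifting upward
history = [0.90, 0.70, 0.55, 0.50, 0.52, 0.53, 0.56, 0.60]
stop_epoch, best_epoch = best_epoch_with_patience(history, patience=3)
print(stop_epoch, best_epoch)
```

In this made-up history the val loss bottoms out at epoch 3 and the rule stops training at epoch 6, after three epochs without improvement.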

It is very important that the val and test data come from the same distribution. It is less important that the train dataset matches val and test, although that is still ideal. If image augmentation were being used (performing minor modifications to training images in an attempt to amplify the training set size), for example, the training set distribution may no longer match the val set distribution. This is acceptable, and network performance can be adequately measured as long as val and test are from the same distribution.
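One simple way to get val and test sets from the same distribution is to cut all three sets from a single shuffle of the data. The sketch below assumes a 10% val and 10% test share, which are placeholder fractions rather than the sizes used in this book; the function name is also hypothetical.

```python
import random


def train_val_test_split(items, val_frac=0.1, test_frac=0.1, seed=42):
    """Shuffle once, then cut the data into train, val, and test.

    The fractions are illustrative defaults, not a recommendation.
    """
    items = list(items)
    random.Random(seed).shuffle(items)   # one shuffle feeds all three sets
    n_val = int(len(items) * val_frac)
    n_test = int(len(items) * test_frac)
    val = items[:n_val]
    test = items[n_val:n_val + n_test]
    train = items[n_val + n_test:]
    return train, val, test


train, val, test = train_val_test_split(range(1000))
print(len(train), len(val), len(test))
```

Any augmentation would then be applied to `train` only, leaving `val` and `test` untouched.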

Managing bias and variance in deep neural networks

Now that we've defined how we will structure data and refreshed ourselves on bias and variance, let's consider how we will control bias and variance errors in our deep neural networks.

- High bias: A network with high bias will have a very high error rate when predicting on the training set. The model is not doing well at fitting the data. In order to reduce the bias you will likely need to change the network architecture. You may need to add layers, neurons, or both. It may be that your problem is better solved using a convolutional or recurrent network.

Of course, sometimes a network shows high bias because the data lacks signal or the problem is simply very difficult, so be sure to calibrate your expectations against a reasonable error rate (I like to start by calibrating on human accuracy).

- High variance: A network with a low bias error is fitting the training data well; however, if the validation error is much greater than the training error, the network has begun to overfit the training data. The two best ways to reduce variance are adding data and adding regularization to the network.

Adding data is straightforward but not always possible. Throughout the book, we will cover regularization techniques as they apply. The most common regularization techniques we will talk about are L2 regularization, dropout, and batch normalization.
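A common way to read these symptoms is to compare the training error against a target (for example, human-level error) and the validation error against the training error. The sketch below encodes that comparison. The function name, the `tolerance` threshold, and the example error rates are all assumptions chosen for illustration; in practice, what counts as a meaningful gap depends on the problem.

```python
def diagnose(train_error, val_error, target_error, tolerance=0.02):
    """Label a training run as high bias, high variance, both, or ok.

    target_error is the error rate we consider achievable, such as
    human-level error. tolerance is an arbitrary gap treated as noise.
    (Hypothetical helper, written only for this example.)
    """
    findings = []
    if train_error - target_error > tolerance:
        findings.append("high bias")       # not even fitting the train set
    if val_error - train_error > tolerance:
        findings.append("high variance")   # fits train, fails to generalize
    return findings or ["ok"]


print(diagnose(train_error=0.15, val_error=0.16, target_error=0.05))
print(diagnose(train_error=0.05, val_error=0.14, target_error=0.05))
print(diagnose(train_error=0.15, val_error=0.30, target_error=0.05))
```

A run can show both problems at once, as the third call does, in which case it usually makes sense to address the bias first.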

K-Fold cross-validation

If you're experienced with machine learning, you may be wondering why I would opt for Hold-Out (train/val/test) validation over K-Fold cross-validation. Training a deep neural network is a very expensive operation, and put very simply, training K of them per set of hyperparameters we'd like to explore is usually not very practical.

We can be somewhat confident that Hold-Out validation does a very good job, given a large enough val and test set. Most of the time, we are hopefully applying deep learning in situations where we have an abundance of data, resulting in an adequate val and test set.

Ultimately, it's up to you. As we will see later, Keras provides a scikit-learn interface that allows Keras models to be integrated into a scikit-learn pipeline. This allows us to perform K-Fold, Stratified K-Fold, and even grid searches with K-Fold. It's both possible and sometimes appropriate to use K-Fold CV when training deep models. That said, for the rest of the book we will focus on using Hold-Out validation.
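To see where the cost of K-Fold comes from, here is a hand-rolled sketch of how the folds are formed. It is written in plain Python for illustration only, and the function name is hypothetical; in practice, scikit-learn's `KFold` class does this job. Every fold produced below would require training a fresh network from scratch, so K folds mean K training runs per set of hyperparameters.

```python
def k_fold_indices(n_samples, k):
    """Yield (train_idx, val_idx) pairs for K-Fold cross-validation.

    Each sample lands in the validation fold exactly once. Indices are
    not shuffled here; shuffle the data beforehand if order matters.
    """
    # Spread any remainder over the first folds so sizes differ by at most 1
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0)
                  for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = list(range(start, start + size))
        train_idx = list(range(0, start)) + list(range(start + size, n_samples))
        yield train_idx, val_idx
        start += size


folds = list(k_fold_indices(10, k=3))
for train_idx, val_idx in folds:
    # A real run would build and train a new model here, once per fold
    print(len(train_idx), len(val_idx))
```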