Import the digits toy dataset using scikit-learn's datasets package and create a Pandas DataFrame containing the features and target matrices. Use the following code:

from sklearn.datasets import load_digits digits = load_digits() import pandas as pd X = pd.DataFrame(digits.data) Y = pd.DataFrame(digits.target)

The shape of your features and target matrix should be as follows, respectively:

(1797,64) (1797,1)

Choose the appropriate approach for splitting the dataset and split it.

Conventional split approach (60/20/20%)

Using the train_test_split function, split the data into an initial train set and a test set:

from sklearn.model_selection import train_test_split X_new, X_test, Y_new, Y_test = train_test_split(X, Y, test_size=0.2)

The shape of the sets that you created should be as follows:

(1437,64) (360,64) (1437,1) (360,1)

Next, calculate the value of the test_size, which sets the size of the dev set equal to the size of the test set that was created previously:

dev_size = 360/1437

The result of the preceding operation is 0.2505.

Finally, split X_new and Y_new into the final train and dev sets. Use the following code:

X_train, X_dev, Y_train, Y_dev = train_test_split(X_new, Y_new, test_size = 0.25)

The final shape of all sets is shown here:

X_train = (1077,64) X_dev = (360,64) X_test = (360,64) Y_train = (1077,1) Y_dev = (360,1) Y_test = (360,1)

Cross-Validation Approach

Using the train_test_split function, split the data into an initial train set and a test set, just like you did previously:

from sklearn.model_selection import train_test_split X_new_2, X_test_2, Y_new_2, Y_test_2 = train_test_split(X, Y, test_size=0.1)

Using the KFold class, perform a 10-fold split:

from sklearn.model_selection import KFold kf = Kfold(n_splits = 10) splits = kf.split(X_new_2)

Remember that cross-validation performs different configuration of splits, shuffling data each time. Considering this, perform a for loop that will go through all the split configurations:

for train_index, dev_index in splits: X_train_2, X_dev_2 = X_new_2.iloc[train_index], X_new_2.iloc[dev_index] Y_train_2, Y_dev_2 = Y_new_2.iloc[train_index], Y_new_2.iloc[dev_index]

The code in charge of training and evaluating the model should be inside the body of the for loop in order to train and evaluate the model with each configuration of splits.

The final shape of the sets will be as follows:

X_train_2 = (1456,64) X_dev_2 = (161,64) X_test_2 = (180,64) Y_train_2 = (1456,1) Y_dev_2 = (161,1) Y_test_2 = (180,1)

Import the toy dataset boston using scikit-learn's datasets package and create a Pandas DataFrame containing the features and target matrices:

from sklearn.datasets import load_digits data = load_digits() import pandas as pd X = pd.DataFrame(data.data) Y = pd.DataFrame(data.target)

Split the data into training and testing sets. Use 20% as the size of the testing set:

from sklearn.model_selection import train_test_split X_train, X_test, Y_train, Y_test = train_test_split(X,Y, test_size = 0.1, random_state = 0)

Train a decision tree over the train set. Then, use the model to predict the class label over the test set (hint: to train the Decision Tree, revisit Exercise 12):

from sklearn import tree model = tree.DecisionTreeClassifier(random_state = 0) model = model.fit(X_train, Y_train) Y_pred = model.predict(X_test)

Use scikit-learn to construct a confusion matrix:

from sklearn.metrics import confusion_matrix confusion_matrix = confusion_matrix (Y_test, Y_pred)

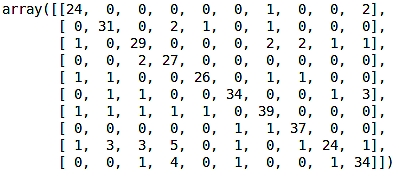

The output of the confusion matrix is shown as follows:

Figure 3.13: Output of the confusion matrix from Activity 9

Calculate the accuracy of the model:

from sklearn.metrics import accuracy_score accuracy_score = accuracy_score(Y_test, Y_pred)

The accuracy is equal to 84.72%.

Calculate the precision and recall. Considering that both the precision and recall can only be calculated over binary data, assume that we are only interested in classifying instances as number 6 or any other number:

Y_test_2 = Y_test[:] Y_test_2[Y_test_2 != 6] = 1 Y_test_2[Y_test_2 == 6] = 0 Y_pred_2 = Y_pred Y_pred_2[Y_pred_2 != 6] = 1 Y_pred_2[Y_pred_2 == 6] = 0 From sklearn.metrics import precision_score, recall_score precision = precision_score(Y_test_2, Y_pred_2) recall = recall_score(Y_test_2, Y_pred_2)

The precision and recall scores should be equal to 98.41% and 98.10%, respectively.

Import the digits toy dataset using scikit-learn's datasets package and create a Pandas DataFrame containing the features and target matrices:

from sklearn.datasets import load_digits data = load_digits() import pandas as pd X = pd.DataFrame(data.data) Y = pd.DataFrame(data.target)

Split the data into training, validation, and testing sets. Use 0.1 as the size of the test set, and an equivalent number to build a validation set of the same shape:

from sklearn.model_selection import train_test_split X_new, X_test, Y_new, Y_test = train_test_split(X, Y, test_size = 0.1, random_state = 101) X_train, X_dev, Y_train, Y_dev = train_test_split(X_new, Y_new, test_size = 0.11, random_state = 101)

Create a train/dev set for both the features and the target values that contains 89 instances/labels of the train set and 89 instances/labels of the dev set:

import numpy as np np.random.seed(101) indices_train = np.random.randint(0, len(X_train), 89) indices_dev = np.random.randint(0, len(X_dev), 89) X_train_dev = pd.concat([X_train.iloc[indices_train,:], X_dev.iloc[indices_dev,:]]) Y_train_dev = pd.concat([Y_train.iloc[indices_train,:], Y_dev.iloc[indices_dev,:]])

Train a decision tree over that training set data:

from sklearn import tree model = tree.DecisionTreeClassifier(random_state = 101) model = model.fit(X_train, Y_train)

Calculate the error rate for all sets of data, and determine which condition is affecting the performance of the model:

from sklearn.metrics import accuracy_score X_sets = [X_train, X_train_dev, X_dev, X_test] Y_sets = [Y_train, Y_train_dev, Y_dev, Y_test] scores = [] for i in range(0, len(X_sets)): pred = model.predict(X_sets[i]) score = accuracy_score(Y_sets[i], pred) scores.append(score)

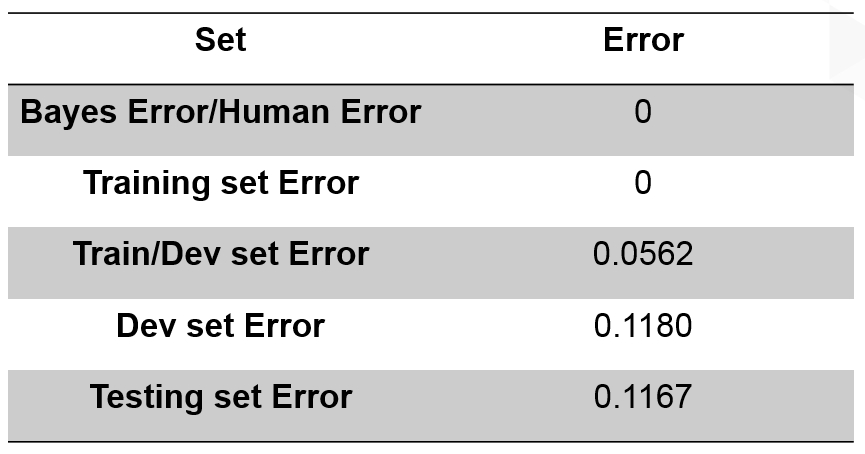

The error rates are shown in the following table:

Figure 3.14: Error rates of the Handwritten Digits model

From the preceding results of the errors, it can be concluded that the model is equally suffering from variance and data mismatch.