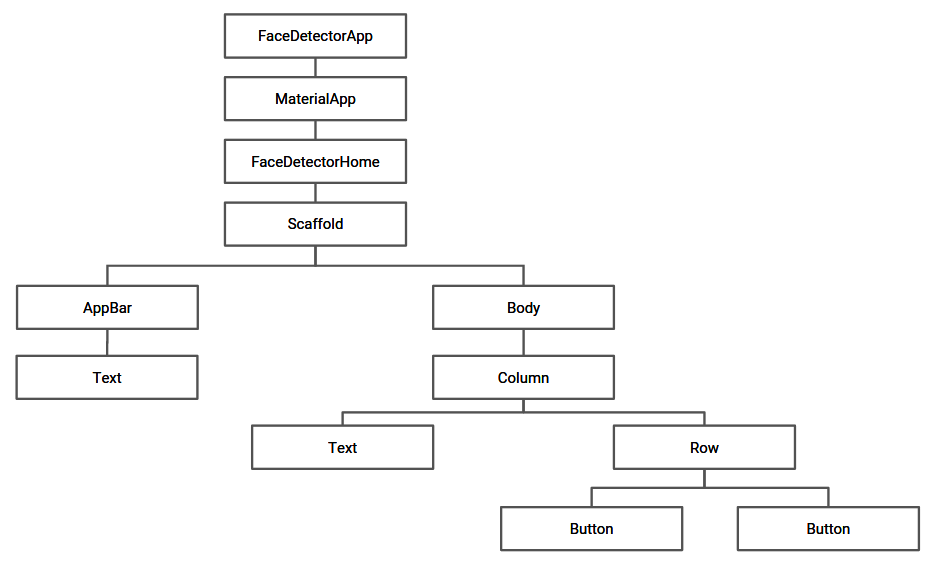

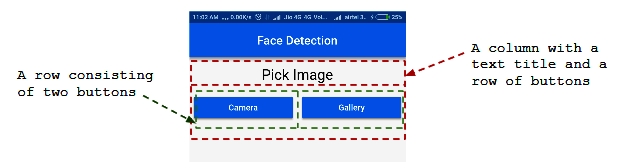

Next, we build the row of button widgets. With the text title in place, we now create a row of two buttons that let the user either pick an existing image from the gallery or take a new one with the camera. We do this in the following steps:

- We start by defining createButton(), which creates a button with the required styling and tap handler for a given image source:

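```dart
// Creates an Expanded, padded button labeled with imgSource.
// Note: RaisedButton was deprecated in Flutter 2.0 and later removed;
// newer code uses ElevatedButton instead.
Widget createButton(String imgSource) {
  return Expanded(
    child: Padding(
      padding: const EdgeInsets.symmetric(horizontal: 8.0),
      child: RaisedButton(
        color: Colors.blue,
        textColor: Colors.white,
        splashColor: Colors.blueGrey,
        onPressed: () {
          // Open the camera or the gallery, depending on this button's label.
          onPickImageSelected(imgSource);
        },
        child: Text(imgSource),
      ),
    ),
  );
}
```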

The method returns a RaisedButton wrapped in a Padding widget that adds 8.0 logical pixels of horizontal padding. The button color is set to blue and the button text color to white. splashColor is set to blueGrey, so a blue-grey ripple appears when the button is tapped.

The code inside onPressed runs when the button is tapped. Here, it calls onPickImageSelected(), which we define in the last step. The text displayed on the button is imgSource, which here is either Camera or Gallery. Finally, the padded button is wrapped in Expanded() so that it fills the available horizontal space in the row; because both buttons are wrapped this way with the default flex factor of 1, they share the row's width equally.

- Next, we define buildSelectImageRowWidget(), which builds a row containing one button for each of the two image sources:

```dart
// Builds a row holding one button per image source.
Widget buildSelectImageRowWidget(BuildContext context) {
  return Row(
    children: <Widget>[
      createButton('Camera'),
      createButton('Gallery'),
    ],
  );
}
```

In the preceding code snippet, we call createButton() once for each image source, creating the Camera and Gallery buttons and adding them to the row's children list.
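The wiring between these two steps can be tried out without Flutter. The following is a minimal plain-Dart sketch, not the chapter's code: ButtonModel, createButtonModel() and buildSelectImageRow() are hypothetical stand-ins for the widgets that keep only the label and the tap callback. It shows that each onPressed closure captures its own imgSource, so both buttons can share a single handler.

```dart
/// Hypothetical stand-in for a button: its label plus the tap callback.
class ButtonModel {
  final String label;
  final void Function() onPressed;

  ButtonModel(this.label, this.onPressed);
}

/// Mirrors createButton(): the closure captures imgSource, so each
/// button forwards its own label to the shared handler.
ButtonModel createButtonModel(
    String imgSource, void Function(String) onPickImageSelected) {
  return ButtonModel(imgSource, () => onPickImageSelected(imgSource));
}

/// Mirrors buildSelectImageRowWidget(): one button model per image source,
/// in the same order as the Row's children.
List<ButtonModel> buildSelectImageRow(void Function(String) onPick) {
  return <ButtonModel>[
    createButtonModel('Camera', onPick),
    createButtonModel('Gallery', onPick),
  ];
}
```

For example, calling `buildSelectImageRow(print)` and then `onPressed()` on the second entry prints `Gallery`.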

- Now, let's define onPickImageSelected(). This method uses the image_picker library to direct the user either to the gallery or the camera to get an image:

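```dart
void onPickImageSelected(String source) async {
  // Map the button label to an image_picker source.
  ImageSource imageSource;
  if (source == 'Camera') {
    imageSource = ImageSource.camera;
  } else {
    imageSource = ImageSource.gallery;
  }

  // _scaffoldKey is assumed to be the GlobalKey<ScaffoldState> of this screen's Scaffold.
  final scaffold = _scaffoldKey.currentState;
  try {
    // Newer image_picker releases replace this static call with
    // ImagePicker().pickImage(), which returns an XFile.
    final file = await ImagePicker.pickImage(source: imageSource);
    if (file == null) {
      // The user closed the picker without choosing an image.
      throw Exception('File is not available');
    }
    // Open the detail screen with the chosen image.
    Navigator.push(
      context,
      MaterialPageRoute(
        builder: (context) => FaceDetectorDetail(file),
      ),
    );
  } catch (e) {
    // Newer Flutter versions use ScaffoldMessenger.of(context).showSnackBar().
    scaffold.showSnackBar(SnackBar(
      content: Text(e.toString()),
    ));
  }
}
```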

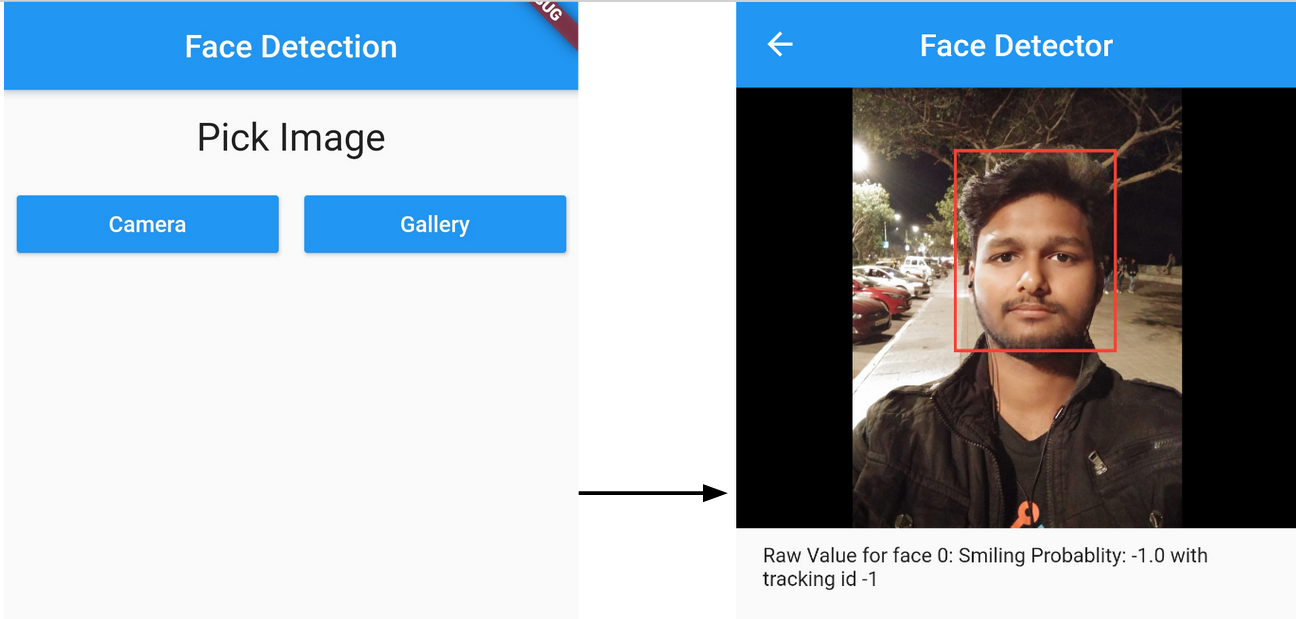

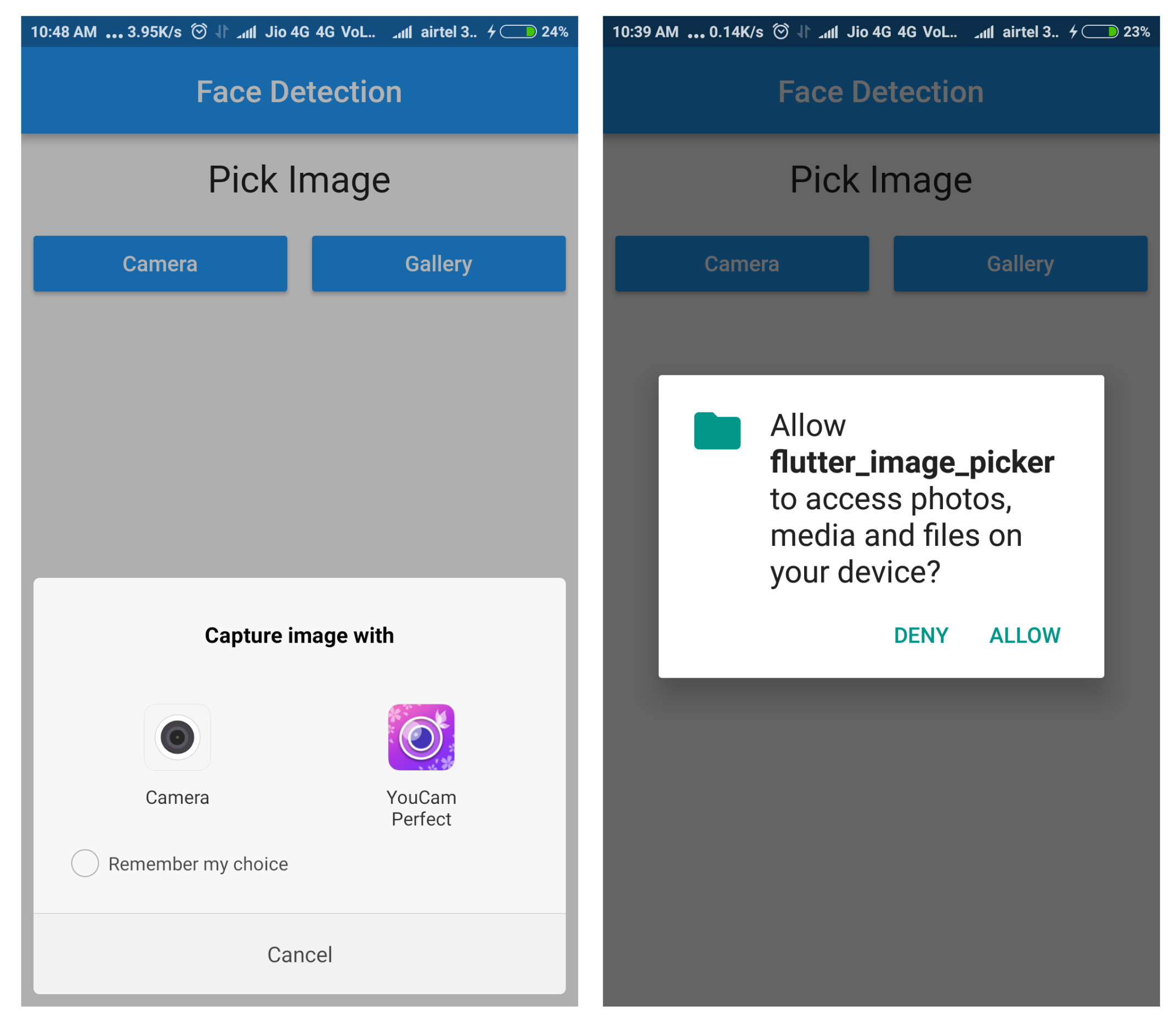

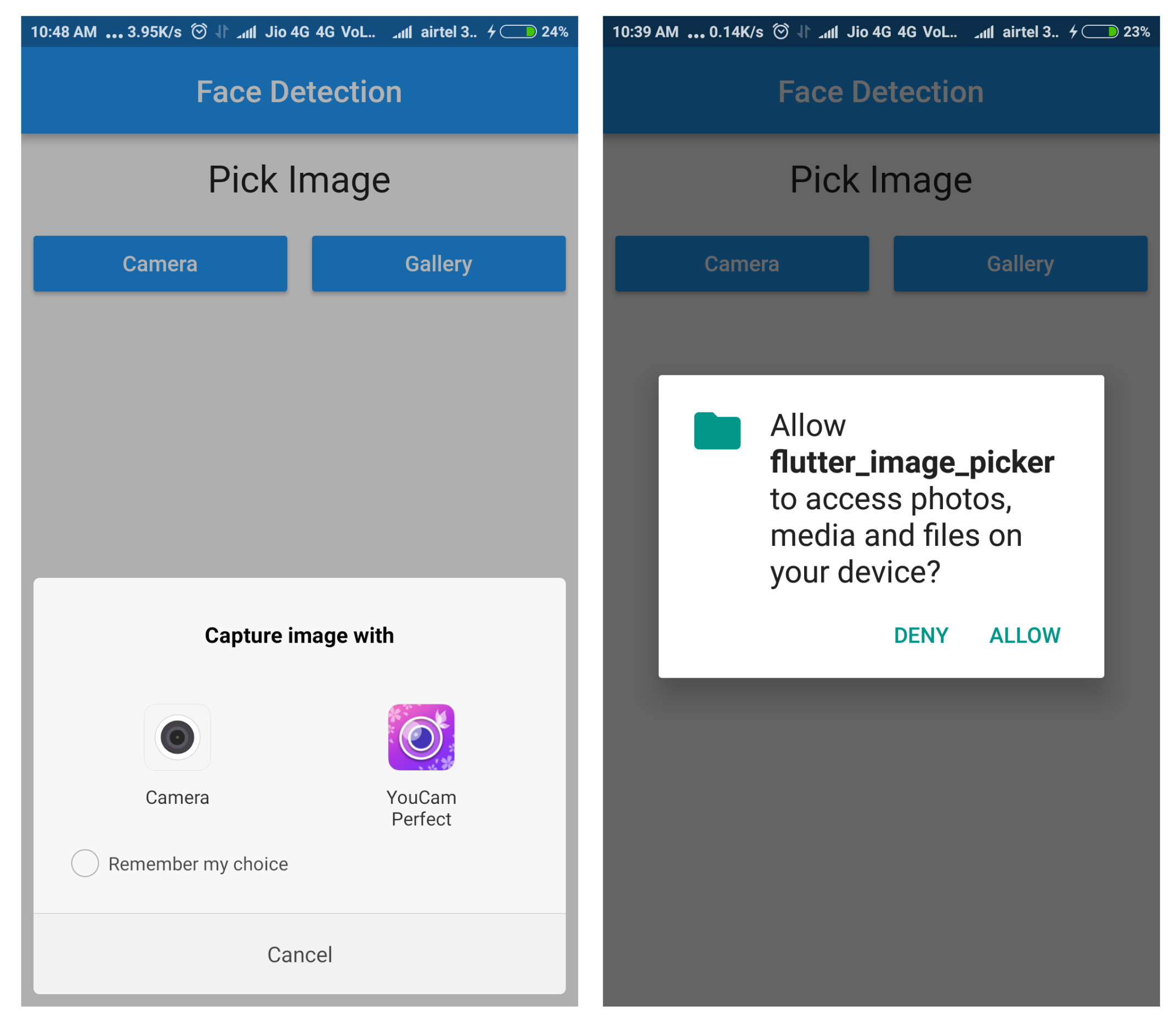

First, imageSource is set to either camera or gallery using an if-else block. If the value passed is Camera, the source of the image file is set to ImageSource.camera; otherwise, it is set to ImageSource.gallery.
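The if-else block boils down to a single rule, sketched here in plain Dart. PickSource is a hypothetical local enum standing in for image_picker's ImageSource (which has the same camera and gallery values), so the snippet runs without the plugin.

```dart
/// Hypothetical stand-in for image_picker's ImageSource enum.
enum PickSource { camera, gallery }

/// Same rule as the if-else in onPickImageSelected(): only the exact
/// label 'Camera' selects the camera; every other label means gallery.
PickSource sourceForLabel(String label) =>
    label == 'Camera' ? PickSource.camera : PickSource.gallery;
```

Because the comparison is exact and case-sensitive, any label other than `'Camera'` (including `'camera'`) falls back to the gallery, so the labels passed to createButton() must match this string exactly.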

Once the source is decided, pickImage() is called with it. If the source is Camera, the user is taken to the camera to take a photo; otherwise, they are taken to the gallery to choose an existing image.

The call to pickImage() is enclosed in a try-catch block. If the user cancels without choosing an image, pickImage() returns null, so we throw an exception ourselves. That exception, like any error raised by the picker itself, sends execution to the catch block, which calls showSnackBar() to display the error message on screen.

Once an image has been chosen successfully and file holds it, Navigator.push() takes the user to the next screen, FaceDetectorDetail, which is discussed in the section Creating the second screen. Navigator.push() receives the current context and a MaterialPageRoute whose builder passes the chosen file to the FaceDetectorDetail constructor. On the FaceDetectorDetail screen, we populate the image holder with the selected image and show details about the detected faces.
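The success and error paths can also be checked in isolation. In this hypothetical plain-Dart sketch, handlePickedImage() receives the already-awaited picker result (null standing in for a cancelled pick), and the openDetail and showError callbacks stand in for Navigator.push() and showSnackBar(). Unlike the chapter's method, it does not await the picker itself, so errors thrown by the plugin are outside its scope.

```dart
/// Hypothetical helper mirroring the try-catch in onPickImageSelected().
/// pickedPath: the picker's result, or null if the user cancelled.
/// openDetail: stand-in for Navigator.push to FaceDetectorDetail.
/// showError: stand-in for showSnackBar.
void handlePickedImage(
  String? pickedPath, {
  required void Function(String path) openDetail,
  required void Function(String message) showError,
}) {
  try {
    if (pickedPath == null) {
      // Same explicit failure as the chapter's null check.
      throw Exception('File is not available');
    }
    openDetail(pickedPath);
  } catch (e) {
    // Report whatever went wrong as text, as the snackbar does.
    showError(e.toString());
  }
}
```

Passing null produces the message `Exception: File is not available`, which is the text the snackbar would display, because the default `Exception.toString()` adds the `Exception: ` prefix.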