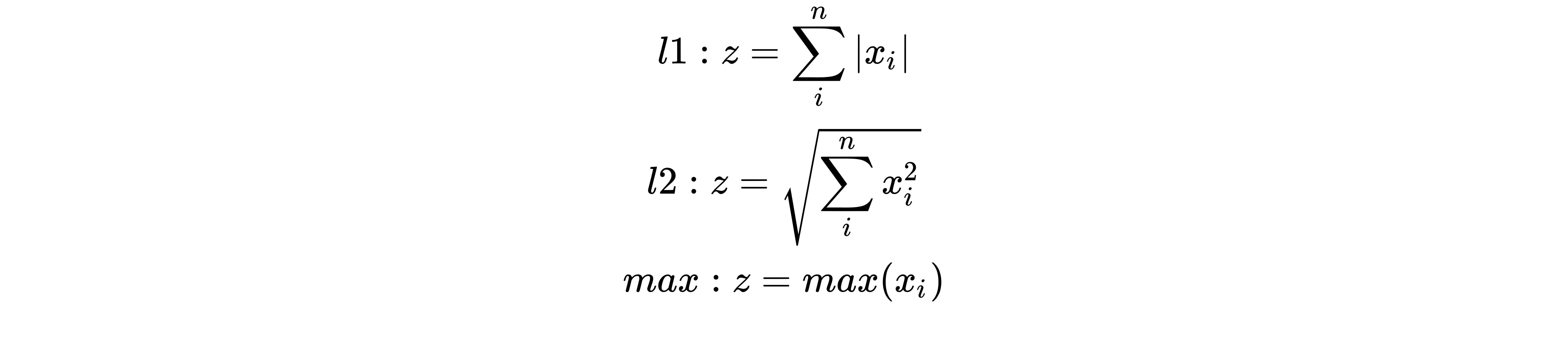

Data normalization adjusts the values in a feature vector so that they can be measured on a common scale. One of the most common forms of normalization used in machine learning rescales each feature vector so that its values sum to 1. When a vector contains negative values, it is the absolute values that sum to 1, which is known as L1 normalization.
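The following is a minimal sketch of that idea in plain Python, not necessarily the recipe the book itself uses. The function name `l1_normalize` and the sample values are assumptions made for illustration. It divides every value by the sum of the absolute values in the vector, and leaves an all-zero vector unchanged to avoid dividing by zero.

```python
def l1_normalize(vector):
    """Scale a feature vector so that its absolute values sum to 1."""
    # Sum of absolute values, i.e. the L1 norm of the vector.
    total = sum(abs(v) for v in vector)
    if total == 0:
        # An all-zero vector cannot be rescaled; return a copy unchanged.
        return list(vector)
    return [v / total for v in vector]


# Hypothetical sample data, chosen only to show mixed signs.
sample = [3.0, -1.5, 2.0, -5.4]
normalized = l1_normalize(sample)

# The absolute values of the result sum to 1 (up to floating-point rounding).
print(sum(abs(v) for v in normalized))
```

In practice, a library routine such as scikit-learn's `preprocessing.normalize(data, norm='l1')` is likely to be a better choice for arrays of many samples, since it normalizes each row of a 2D array in one call.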


Python Machine Learning Cookbook - Second Edition
