A neural network from scratch in Python

To gain a better understanding of how NNs work, we will formulate the single-layer architecture and the forward propagation computations displayed in Figure 17.2 using matrix algebra and implement them using NumPy. You can find the code samples in the notebook build_and_train_feedforward_nn.

The input layer

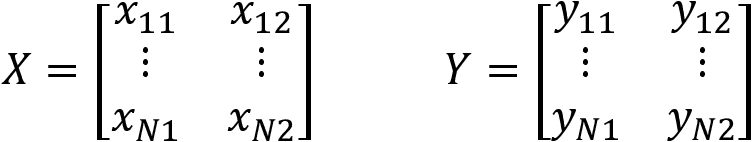

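The architecture shown in Figure 17.2 is designed for two-dimensional input data X, where each sample belongs to one of two classes encoded in Y. In matrix form, both X and Y are of shape $N \times 2$:

$$
X = \begin{bmatrix} x_{11} & x_{12} \\ \vdots & \vdots \\ x_{N1} & x_{N2} \end{bmatrix}, \qquad
Y = \begin{bmatrix} y_{11} & y_{12} \\ \vdots & \vdots \\ y_{N1} & y_{N2} \end{bmatrix}
$$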

We will generate 50,000 random samples with binary labels, arranged as two concentric circles with different radii, using scikit-learn's make_circles function so that the classes are not linearly separable:

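    from sklearn.datasets import make_circles

    N = 50000      # number of samples
    factor = 0.1   # ratio of the inner circle's radius to the outer circle's
    noise = 0.1    # standard deviation of the Gaussian noise added to the points

    X, y = make_circles(n_samples=N, shuffle=True,
                        factor=factor, noise=noise)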
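To make the geometry concrete, the following NumPy-only sketch mimics the kind of data described above. It is an illustration under stated assumptions, not scikit-learn's implementation: the helper name sketch_circles, the seed, the smaller sample size, and the 0.55 threshold are all hypothetical choices. It places class 0 on a circle of radius 1 and class 1 on a circle of radius factor, adds Gaussian noise, and then compares a threshold on the distance from the origin (a nonlinear feature) with a threshold on a single coordinate (one example of a linear boundary).

```python
import numpy as np

def sketch_circles(n_samples, factor, noise, seed=0):
    """Hypothetical stand-in for make_circles: two noisy concentric circles."""
    rng = np.random.default_rng(seed)
    n_outer = n_samples // 2
    n_inner = n_samples - n_outer
    # Angles spread evenly around each circle
    theta_outer = np.linspace(0, 2 * np.pi, n_outer, endpoint=False)
    theta_inner = np.linspace(0, 2 * np.pi, n_inner, endpoint=False)
    outer = np.column_stack([np.cos(theta_outer), np.sin(theta_outer)])
    inner = factor * np.column_stack([np.cos(theta_inner), np.sin(theta_inner)])
    # Stack both circles and perturb every point with Gaussian noise
    X = np.vstack([outer, inner]) + rng.normal(scale=noise, size=(n_samples, 2))
    y = np.concatenate([np.zeros(n_outer, dtype=int), np.ones(n_inner, dtype=int)])
    # Shuffle samples and labels together
    idx = rng.permutation(n_samples)
    return X[idx], y[idx]

X_demo, y_demo = sketch_circles(n_samples=1000, factor=0.1, noise=0.1)

# Distance from the origin: large for the outer class, small for the inner class
radius = np.linalg.norm(X_demo, axis=1)
radius_accuracy = np.mean((radius < 0.55) == (y_demo == 1))

# A threshold on the first coordinate is one example of a linear boundary
coord_accuracy = np.mean((X_demo[:, 0] < 0.0) == (y_demo == 1))

print(X_demo.shape, y_demo.shape)
print(f"radius threshold accuracy: {radius_accuracy:.3f}")
print(f"x1 threshold accuracy:     {coord_accuracy:.3f}")
```

The radius threshold classifies almost every point correctly, whereas the coordinate threshold does little better than guessing. This is the sense in which the classes are not linearly separable in the original two input features.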

We then convert the one-dimensional output into a two-dimensional array:

    Y = np.zeros((N, 2))
    for c in [0, 1]:
        Y[y == c...
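A common way to represent binary class labels as an $N \times 2$ array is one-hot encoding, where each row has a 1 in the column of the sample's class and 0 elsewhere. As a minimal sketch, assuming integer labels in {0, 1}, such an array can be built by indexing the rows of an identity matrix. This is an alternative formulation for illustration, not the code from the notebook, and the names y_demo and Y_demo are hypothetical.

```python
import numpy as np

# Hypothetical class labels for five samples
y_demo = np.array([0, 1, 1, 0, 1])

# Row i of Y_demo is row y_demo[i] of the 2x2 identity matrix
Y_demo = np.eye(2)[y_demo]

print(Y_demo.shape)
print(Y_demo)

# The column index of the 1 in each row recovers the original label
recovered = Y_demo.argmax(axis=1)
print(np.array_equal(recovered, y_demo))
```

Indexing an identity matrix avoids an explicit loop over classes and extends to more than two classes by changing the size passed to np.eye.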